Fast 🔥

Legend-State is the result of years of iteration and dozens of experiments and rewrites to build the fastest possible state system. The primary reason it's so fast is that it optimizes for the fewest renders: components re-render only when the state they actually depend on changes.

It may seem silly to quibble over milliseconds, but state is a hot path of most applications, so it's important that it be as fast as possible to keep your whole application snappy. In our case, some Legend users have hundreds of thousands of items flowing through state, so state became the core bottleneck and was critical to optimize.

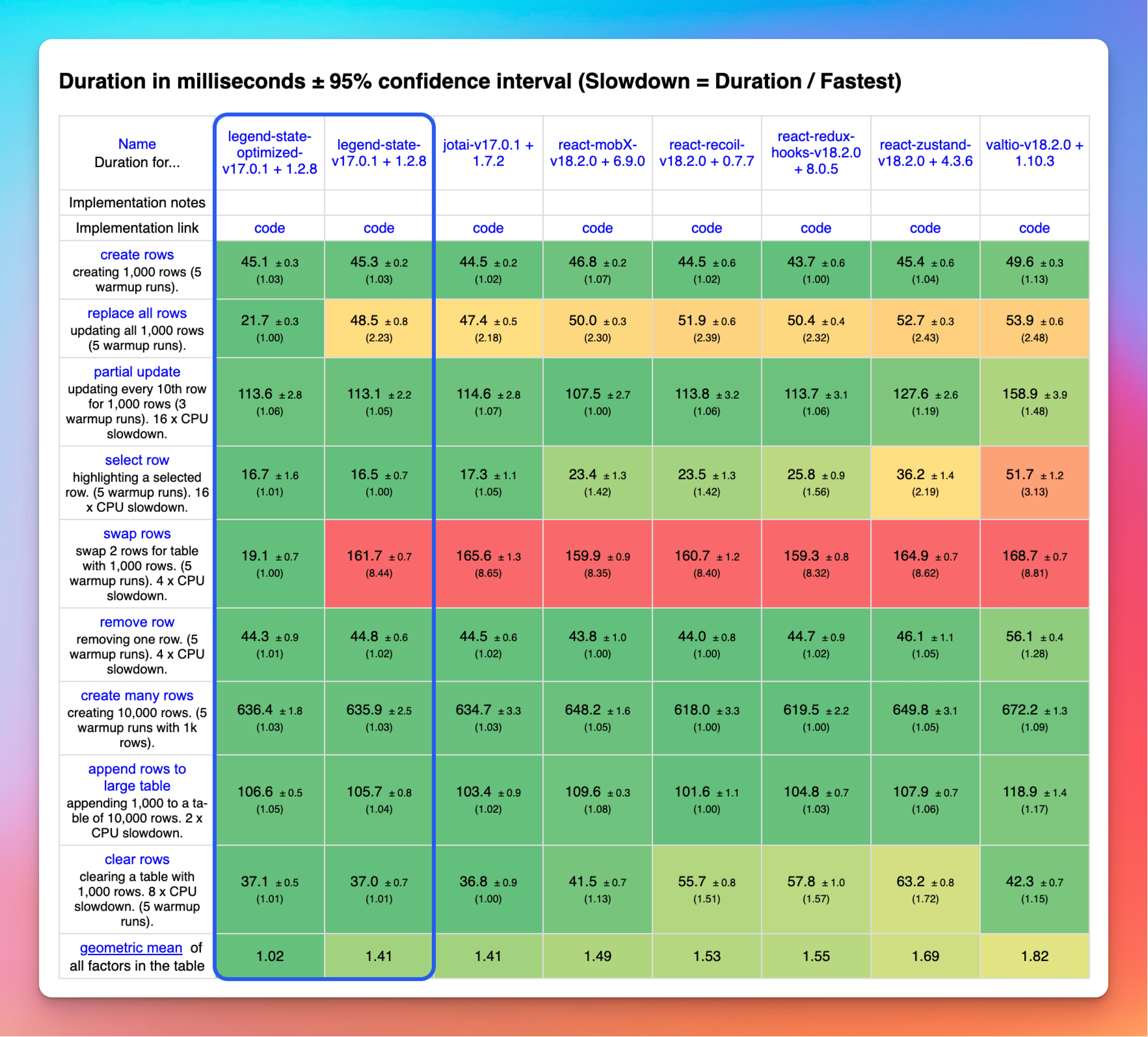

We'll show results of the popular krausest benchmark and use that to describe why Legend-State is so fast. This benchmark is a good approximation of real-world performance, but the most important optimization in Legend-State is that it just does less work because it renders less, less often.

Benchmark

Legend-State's optimized mode (on the left) optimizes for rendering each row when it changes, but not the entire list, which is reflected in the fast partial update and select row benchmarks. That typically incurs an extra upfront cost to set up the listeners in each row, but Legend-State is so optimized that even so it's actually still among the fastest in the create many rows benchmark.

Legend-State really shines in the replace all rows and swap rows benchmarks. When the number of elements is unchanged, it does not need to re-render the list and can render only the individual rows that changed. That brings a big speed improvement for drag/drop or when items are moved around in a list.

You can opt into the fast array rendering with the optimized prop on the For component. Note that this optimization reuses React nodes rather than replacing them as usual, so it may have unexpected behavior with some types of animations or if you are modifying the DOM externally. For that reason the benchmark considers usage of the optimized prop as "non-keyed".

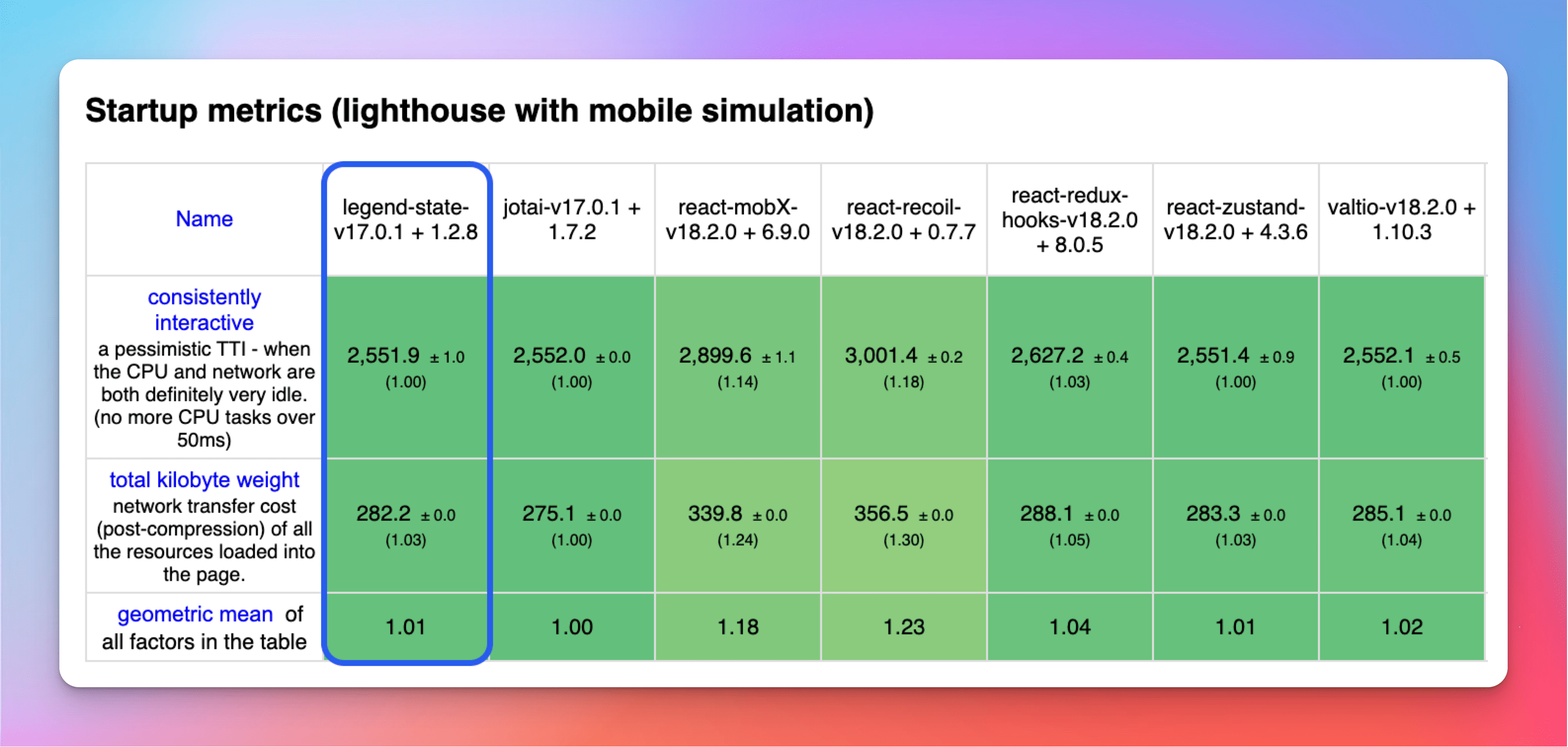

Startup metrics

In these benchmarks you can see that Legend-State has the fastest TTI (time to interactive) because Legend-State doesn't do much processing up front. Creating observables and adding thousands of listeners does very little work. Because observables don't have to modify the underlying data at all, creating an observable just creates a tiny amount of metadata.

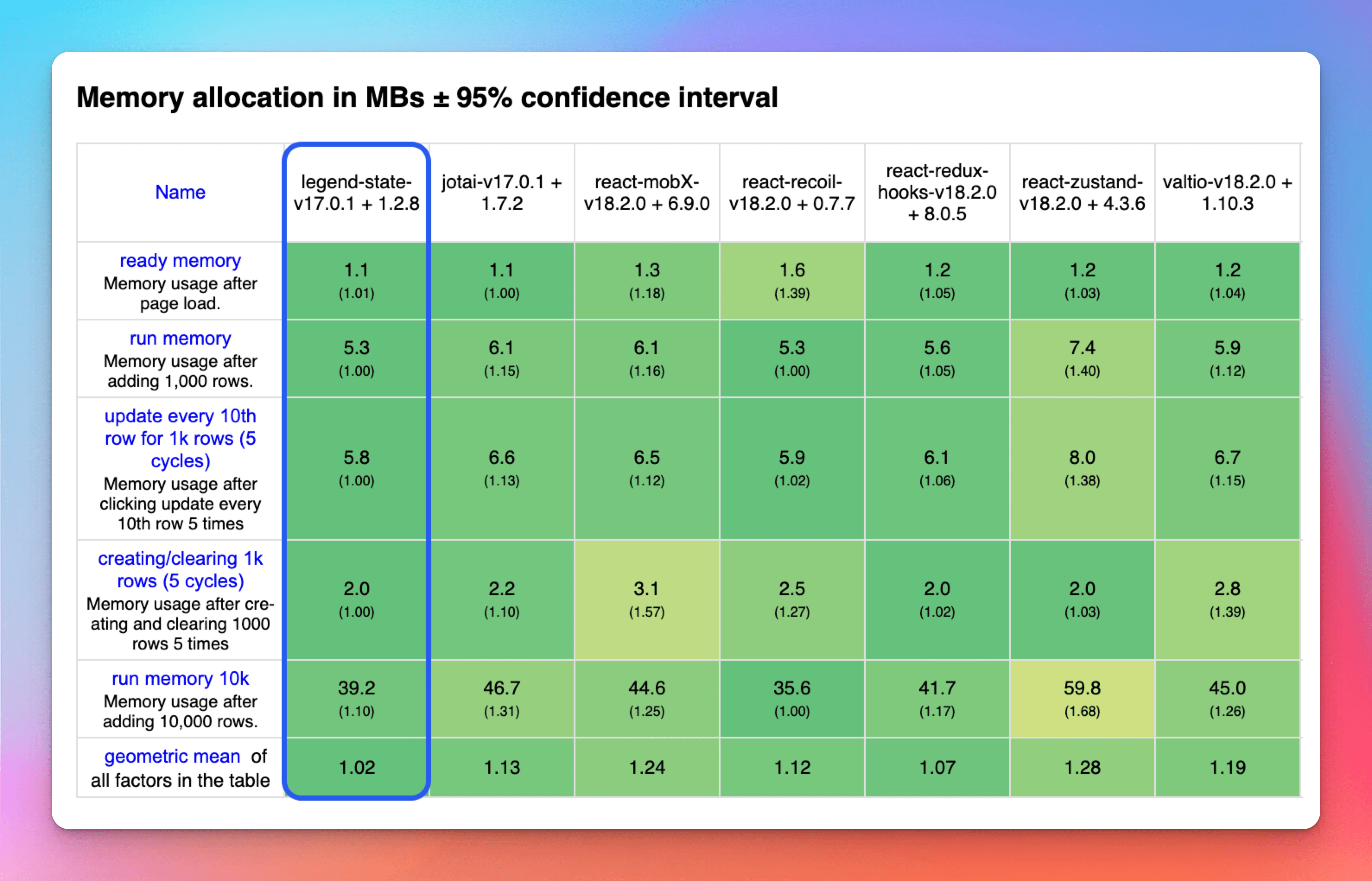

Memory

The memory usage is lower than the others because Legend-State does not modify the underlying data or keep a lot of extra metadata, and it does not create many objects or closures.

Why it's fast

Legend-State is optimized in a lot of different ways:

Proxy to path

Legend-State uses Proxy, which is how it exposes the observable functions (get/set/listen etc...) on anything within an observable object. But it differs from other Proxy-based systems by not touching the underlying data at all. Each proxy node represents a path within the object tree, and to get the value of any node it traverses the raw data to that path and returns the value. So every node within the state object stores minimal metadata, and never has to modify or clone the underlying data, which keeps object creation to a minimum and memory usage down.
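
The idea of a node being "just a path" can be sketched in a few lines. This is a simplified illustration and not Legend-State's actual implementation: `createNode`, `readAtPath`, and `writeAtPath` are hypothetical names, and the sketch skips things the real library does, such as caching nodes and notifying listeners.

```typescript
// Hypothetical sketch of path-based proxying; not Legend-State's real code.
type Raw = Record<string, unknown>;

// Walk the raw data down to `path` and return whatever is stored there.
function readAtPath(root: Raw, path: readonly string[]): unknown {
  let value: unknown = root;
  for (const key of path) {
    if (value === null || typeof value !== "object") return undefined;
    value = (value as Raw)[key];
  }
  return value;
}

// Write into the raw data in place: no cloning and no wrapper objects.
function writeAtPath(root: Raw, path: readonly string[], next: unknown): void {
  const last = path[path.length - 1];
  if (last === undefined) throw new Error("this sketch cannot replace the root");
  let parent: Raw = root;
  for (const key of path.slice(0, -1)) {
    const child = parent[key];
    if (child === null || typeof child !== "object") {
      throw new Error(`no object at "${key}"`);
    }
    parent = child as Raw;
  }
  parent[last] = next;
}

// A node remembers only the root and its own path; values are looked up on demand.
function createNode(root: Raw, path: readonly string[] = []): any {
  const api = {
    get: () => readAtPath(root, path),
    set: (next: unknown) => writeAtPath(root, path, next),
  };
  return new Proxy(api, {
    get(target, prop) {
      if (typeof prop === "symbol") return undefined;
      if (prop === "get") return target.get;
      if (prop === "set") return target.set;
      // Any other property access yields a child node one level deeper.
      return createNode(root, [...path, prop]);
    },
  });
}

const raw = { user: { name: "Ada" } };
const state = createNode(raw);
state.user.name.set("Grace");
console.log(state.user.name.get()); // "Grace"
console.log(raw.user.name); // "Grace": the raw object itself was updated
```

Because a node holds no copy of the data, creating one costs little more than allocating its path, which is the property the paragraph above describes.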

Proxying by path also enables some really interesting list optimizations: in the For component's optimized mode, the Proxy object for the observable references an index in the array. So when array elements rearrange, the existing Proxy nodes can be updated to point to their new indices, and we can render only the changed or moved elements and skip rendering the full array.

Listeners at each node

Each node keeps a Set of listeners so that you can listen to changes to any value anywhere within the state. This is great for performance because changes only call the few listeners that are affected by that change. JavaScript's Set provides nice benefits here: its uniqueness constraint ensures callbacks are added only once, and removing a listener is an O(1) operation.
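
A minimal sketch of a per-node listener set, assuming a hypothetical `ListenerNode` class (the name and shape are illustrative, not the library's internals), shows both properties of `Set` mentioned above:

```typescript
// Hypothetical sketch: one Set of callbacks per node.
type Listener = (value: unknown) => void;

class ListenerNode {
  private listeners = new Set<Listener>();

  // Adding the same callback twice is a no-op because Set entries are unique.
  // Returns a disposer that removes the callback again.
  onChange(cb: Listener): () => void {
    this.listeners.add(cb);
    return () => {
      this.listeners.delete(cb);
    };
  }

  // Only the callbacks registered on this node are called.
  notify(value: unknown): void {
    for (const cb of this.listeners) cb(value);
  }

  get size(): number {
    return this.listeners.size;
  }
}
```

With an array, the same behavior would need an `indexOf` scan on every add (to avoid duplicates) and on every remove.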

Granular renders

Extensive care is taken to ensure that components are rendered only when their state truly changes. Legend-State provides functions that let you be specific about when changes are tracked, and useValue to isolate a tracking computation so that it returns a single value. The best thing for your app's performance is to render less, less often.

Easy fine-grained reactivity

Legend-State has built-in helpers to easily extract children so that their changes do not affect the parent. This keeps large parent components from rendering often just because their children change.

Shallow listeners

Shallow listeners are called on objects only when keys are added or removed, but not when children are changed. This lets the child components manage their own rendering and large parent components don't need to render.
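
The rule itself is easy to state in code. The following is only an illustration of the condition under which a shallow listener would fire; `keysChanged` is a hypothetical helper, and the library may detect this differently.

```typescript
// Hypothetical sketch: a shallow listener cares about the set of keys,
// not about the values stored under them.
function keysChanged(
  prev: Record<string, unknown>,
  next: Record<string, unknown>
): boolean {
  const prevKeys = Object.keys(prev);
  if (prevKeys.length !== Object.keys(next).length) return true;
  for (const key of prevKeys) {
    if (!Object.prototype.hasOwnProperty.call(next, key)) return true;
  }
  return false;
}

console.log(keysChanged({ a: 1, b: 2 }, { a: 9, b: 2 })); // false: only a child value changed
console.log(keysChanged({ a: 1, b: 2 }, { a: 1 })); // true: a key was removed
```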

Array optimizations

Optimizing list rendering is a primary goal because Legend-State is built for Legend and its huge documents and lists, so it aims to render parent list components as little as possible. When changes to an array only modify children or transpose elements, and do not add or remove elements, it can render only the changed elements and skip rendering the parent list. See Arrays for more details.
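
As a rough sketch of that decision, assume each element carries a unique `id` (an assumption of this example, along with the hypothetical `diffById` helper). If the new array is a permutation of the old one, only the positions whose element differs need to render:

```typescript
// Hypothetical sketch; assumes ids are unique within each array.
interface Item {
  id: string;
}

interface ListDiff {
  parentMustRender: boolean; // true when elements were added or removed
  changedIndices: number[]; // positions that now hold a different element
}

function diffById(prev: readonly Item[], next: readonly Item[]): ListDiff {
  if (prev.length !== next.length) {
    return { parentMustRender: true, changedIndices: [] };
  }
  const prevIds = new Set<string>();
  for (const item of prev) prevIds.add(item.id);

  const changedIndices: number[] = [];
  for (const [i, item] of next.entries()) {
    // An unknown id means something was added (and something else removed).
    if (!prevIds.has(item.id)) {
      return { parentMustRender: true, changedIndices: [] };
    }
    if (item.id !== prev[i]?.id) changedIndices.push(i);
  }
  return { parentMustRender: false, changedIndices };
}

const before = [{ id: "a" }, { id: "b" }, { id: "c" }, { id: "d" }];
const swapped = [{ id: "a" }, { id: "c" }, { id: "b" }, { id: "d" }];
console.log(diffById(before, swapped)); // parentMustRender: false, changedIndices: [1, 2]
```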

Minimal closures and object creation

While other state libraries create lots of new closures and objects for each observable, Legend-State is careful to keep it to a minimum. The observable functions are created only once so there is little cost to creating tons of observables.

No boilerplate

Because Legend-State's API is terse and requires no boilerplate, your apps don't need to be filled with extra code. That makes them smaller and faster, because you're shipping smaller files to your users.

Micro-optimizations

Beyond the architectural optimizations, Legend-State also does a lot of micro-optimizations that don't necessarily have a huge effect on their own, but together they add up.

- For loop vs. forEach: For loops are still quite a bit faster than `forEach` and don't involve creating closures, so for loops are always favored.
- Set vs. array: Compared to an array, `Set` has a marginal creation cost, but the uniqueness constraint and fast element removal end up making it overall faster for managing listeners than arrays.
- Map vs. object: `Map` has a marginal creation cost compared to an object, but its operations are generally faster, so it is used for all the caching and comparing changing arrays.
- Closures vs. bind: Closures are surprisingly much faster than `bind`, so Legend-State favors creating small closures when needed.
- isNaN is slow: This surprised us, but `isNaN` was causing significant slowdown. `+n - +n < 1` is a much faster way to check if a string is a number.
- Overloading Object prototype is a no-no: We tried extending the prototype of the built-in `Object` but that caused a huge slowdown across the board, so that's no good.
- Proxy vs. Object.defineProperty: We also tried using `Object.defineProperty` to add properties to objects, but Proxy is much faster.
- Cloning is slow: Change handlers have a `getPrevious()` function to opt-in to computing the previous state because cloning objects unnecessarily was wasteful.
- for of in Set/Map: `for of` loops are the fastest way to iterate through Set and Map values.
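
To make the `+n - +n < 1` trick from the list above concrete, here is a small sketch. The function name `isNumericString` is ours, not the library's. The expression works because unary `+` coerces the string to a number, and a failed coercion yields `NaN`, for which every comparison is false.

```typescript
// Sketch of the coercion trick; the helper name is hypothetical.
function isNumericString(n: string): boolean {
  // Finite number: x - x is 0, and 0 < 1 is true.
  // NaN or Infinity: x - x is NaN, and NaN < 1 is false.
  return +n - +n < 1;
}

console.log(isNumericString("42")); // true
console.log(isNumericString("3.5")); // true
console.log(isNumericString("abc")); // false
console.log(isNumericString("Infinity")); // false
console.log(isNumericString("")); // true, because +"" coerces to 0
```

Note the last two cases: unlike a check built on `isNaN`, this expression rejects `"Infinity"`, and like any coercion-based check it accepts the empty string, so it is best suited to inputs such as object keys where those cases are known not to matter.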